Minions: where local and cloud LLMs meet

February 25, 2025

Avanika Narayan, Dan Biderman, and Sabri Eyuboglu from Christopher Ré’s Stanford Hazy Research lab, along with Avner May, Scott Linderman, and James Zou, have developed a way to shift a substantial portion of LLM workloads to consumer devices. Small on-device models (such as Llama 3.2 with Ollama) collaborate with larger models in the cloud (such as GPT-4o).

This new paper, with accompanying open-source code, aims to reduce cloud costs with minimal or no quality degradation. It offers two protocol configurations:

- Minion: the cloud model chats freely with a single local model that has access to the data, until the two reach a solution.
  - Achieves a 30.4x reduction in remote costs while maintaining 87% of the cloud model's performance.
- MinionS: the cloud model decomposes the task into bite-sized subtasks, each performed on a chunk of the context, and small local LLMs solve these subtasks in parallel.
  - Achieves a 5.7x reduction in remote costs while maintaining 97.9% of the cloud model's performance.
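The following is a minimal, model-free sketch of the MinionS decomposition pattern. It works in four steps:

- A "remote" step splits the task into subtasks.
- The context is cut into chunks.
- Every (subtask, chunk) pair goes to a "local" worker in parallel.
- The non-empty results are collected for the remote side.

All function names here are hypothetical, and the "models" are simple keyword matchers. The rule that each bracketed term in the task becomes one subtask is an assumption made purely for illustration. This is not how the minions package implements the protocol.

```python
from __future__ import annotations

import re
from concurrent.futures import ThreadPoolExecutor

SAMPLE_CONTEXT = """Blood pressure: 160/100 mmHg
HDL: 45 mg/dL
LDL: 170 mg/dL
Fasting glucose: unremarkable"""


def remote_decompose(task: str) -> list[str]:
    """Stand-in for the cloud model (hypothetical rule): each [bracketed] term is one subtask."""
    return re.findall(r"\[(.+?)\]", task)


def chunk_context(context: str, lines_per_chunk: int) -> list[str]:
    """Split the context into chunks of at most `lines_per_chunk` non-empty lines."""
    lines = [ln for ln in context.splitlines() if ln.strip()]
    return [
        "\n".join(lines[i:i + lines_per_chunk])
        for i in range(0, len(lines), lines_per_chunk)
    ]


def local_worker(subtask: str, chunk: str) -> str | None:
    """Stand-in for a small local LLM: return matching lines from one chunk, or None."""
    hits = [ln.strip() for ln in chunk.splitlines() if subtask.lower() in ln.lower()]
    return "; ".join(hits) if hits else None


def run_minions_sketch(task: str, context: str, lines_per_chunk: int = 2) -> dict[str, list[str]]:
    subtasks = remote_decompose(task)
    chunks = chunk_context(context, lines_per_chunk)
    jobs = [(subtask, chunk) for subtask in subtasks for chunk in chunks]
    # Every (subtask, chunk) pair is independent, so the local calls can run in parallel.
    with ThreadPoolExecutor(max_workers=4) as pool:
        results = list(pool.map(lambda job: local_worker(*job), jobs))
    # "Remote" aggregation: keep only non-empty findings, grouped by subtask.
    findings: dict[str, list[str]] = {}
    for (subtask, _chunk), result in zip(jobs, results):
        if result is not None:
            findings.setdefault(subtask, []).append(result)
    return findings


if __name__ == "__main__":
    print(run_minions_sketch("Assess [blood pressure] and [LDL]", SAMPLE_CONTEXT))
    # → {'blood pressure': ['Blood pressure: 160/100 mmHg'], 'LDL': ['LDL: 170 mg/dL']}
```

The thread pool mirrors the idea that the local workers run concurrently. With real models, each `local_worker` call would be an LLM request rather than a string search.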

Get started

Clone the repository:

```bash
git clone https://github.com/HazyResearch/minions.git
cd minions
```

Optionally, create a virtual environment with your preferred tool (e.g., conda, venv, or uv). With venv:

```bash
python3 -m venv .venv
source .venv/bin/activate
```

Next, install the minions package in editable mode, along with its dependencies:

```bash
pip install -e .
```
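To confirm the install worked, you can ask the interpreter whether the third-party packages imported by the example code are available. That means `minions` and `pydantic`. This small helper is a generic sketch, not part of the repository. It relies only on `importlib.util.find_spec`, which returns None for a top-level name it cannot locate.

```python
from __future__ import annotations

import importlib.util


def missing_packages(names: list[str]) -> list[str]:
    """Return the top-level package names the current interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(["minions", "pydantic"])
    print("all found" if not missing else f"missing: {missing}")
```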

If you don’t have it yet, install Ollama, then pull Meta’s Llama 3.2 model:

```bash
ollama pull llama3.2
```
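Ollama serves a local REST API, by default at http://localhost:11434. Its `GET /api/tags` endpoint lists the models you have pulled. The sketch below checks that response for Llama 3.2. The helper names are hypothetical. The sketch also assumes that a bare name such as `llama3.2` corresponds to the `:latest` tag created by `ollama pull llama3.2`.

```python
from __future__ import annotations

import json
import urllib.request

OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"  # Ollama's default local address


def model_names(tags_payload: dict) -> list[str]:
    """Extract model names (e.g. "llama3.2:latest") from an /api/tags response body."""
    return [m["name"] for m in tags_payload.get("models", [])]


def has_model(tags_payload: dict, wanted: str) -> bool:
    """True if `wanted` is installed; a bare name is treated as its ":latest" tag."""
    target = wanted if ":" in wanted else f"{wanted}:latest"
    return target in model_names(tags_payload)


def fetch_tags(url: str = OLLAMA_TAGS_URL, timeout: float = 5.0) -> dict:
    """Query the local Ollama server for its installed models (requires Ollama running)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)
```

With Ollama running, `has_model(fetch_tags(), "llama3.2")` should return True after the pull above. The parsing is kept separate from the HTTP call so it can be checked without a running server.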

Lastly, create an OpenAI API key for the cloud model.
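The official OpenAI Python SDK reads the key from the `OPENAI_API_KEY` environment variable by default. This check assumes the minions `OpenAIClient` picks the key up the same way. Consult the repository if you need to pass it explicitly. A quick sanity check before running anything could use this hypothetical helper:

```python
import os


def require_env(var: str = "OPENAI_API_KEY") -> str:
    """Return the value of `var` (whitespace-trimmed), or raise with a hint if it is unset or blank."""
    value = os.environ.get(var, "").strip()
    if not value:
        raise RuntimeError(f"{var} is not set; export it before running the examples")
    return value
```

After exporting `OPENAI_API_KEY` in your shell, `require_env()` returns the key instead of raising.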

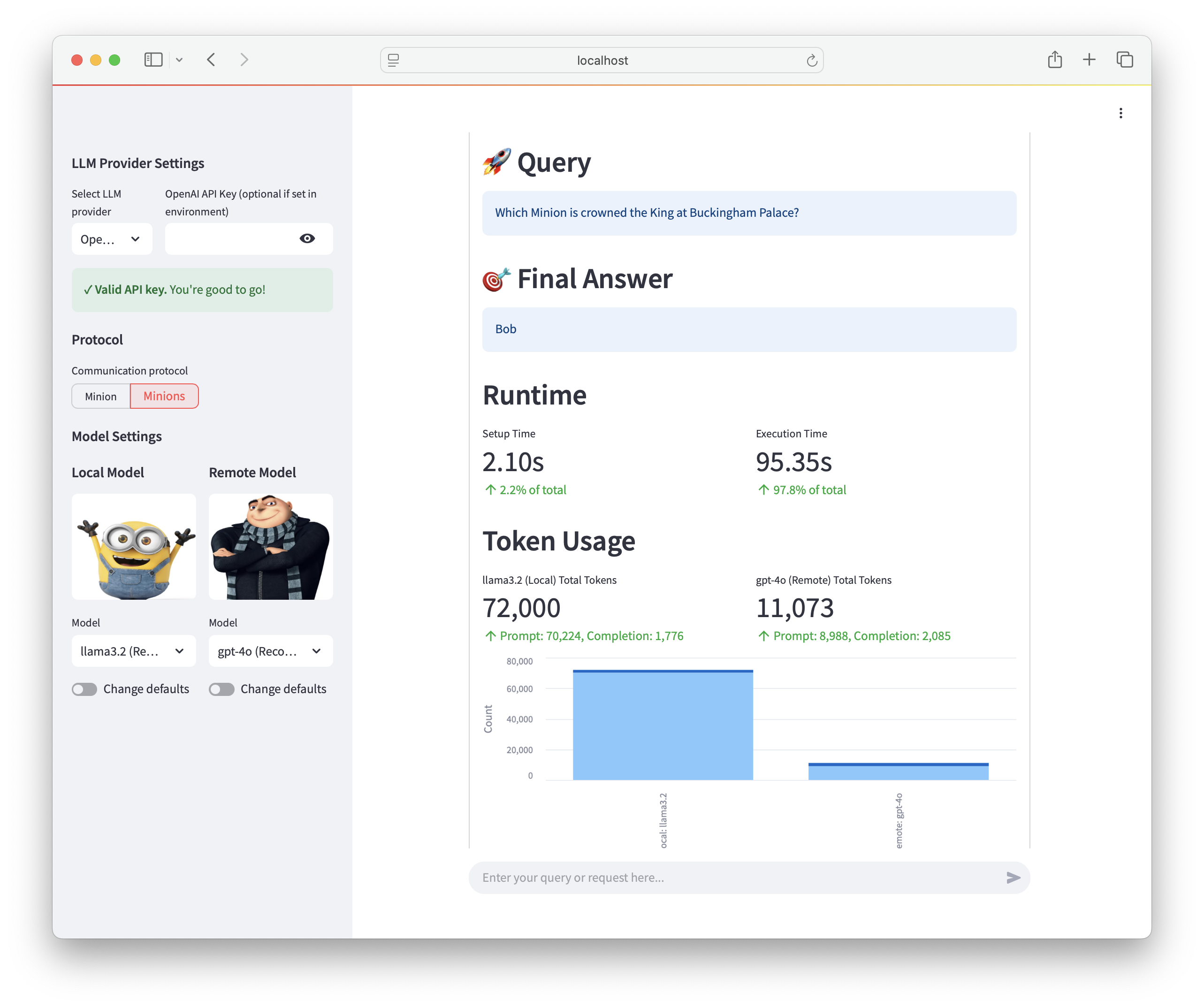

Running the demo app

The provided Streamlit app runs an interactive demo of both the Minion and MinionS protocols. To start it, run:

```bash
streamlit run app.py
```

A browser window will open with instructions for entering your OpenAI API key, selecting a local model, and running either Minion or MinionS.

Example code

To run Minion or MinionS programmatically from Python, use the minions package.

Minion

First, create a file named example.py with the following contents:

```python
from minions.clients.ollama import OllamaClient
from minions.clients.openai import OpenAIClient
from minions.minion import Minion

# Local model served by Ollama (runs on your device)
local_client = OllamaClient(
    model_name="llama3.2",
)

# Remote model served by OpenAI (runs in the cloud)
remote_client = OpenAIClient(
    model_name="gpt-4o",
)

# Instantiate the Minion object with both clients
minion = Minion(local_client, remote_client)

context = """
Patient John Doe is a 60-year-old male with a history of hypertension. In his latest checkup, his blood pressure was recorded at 160/100 mmHg, and he reported occasional chest discomfort during physical activity.

Recent laboratory results show that his LDL cholesterol level is elevated at 170 mg/dL, while his HDL remains within the normal range at 45 mg/dL. Other metabolic indicators, including fasting glucose and renal function, are unremarkable.
"""

task = "Based on the patient's blood pressure and LDL cholesterol readings in the context, evaluate whether these factors together suggest an increased risk for cardiovascular complications."

# Execute the Minion protocol for up to two communication rounds
output = minion(
    task=task,
    context=[context],
    max_rounds=2,
)

print(output["final_answer"])
```

Then run the example:

```bash
python example.py
```
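The `max_rounds` argument caps how long the two models keep talking. The following toy version of a Minion-style loop illustrates that control flow, using hypothetical stand-ins for both sides:

- `remote_step` asks for one missing fact per round, or gives a final answer.
- `local_step` answers from the context with a keyword search.

In this sketch, one round is one remote question plus one local reply. When the budget runs out, the remote side is forced to answer with what it has. The package's own definition of a round and its stopping rule may differ.

```python
from __future__ import annotations

# Hypothetical vocabulary of facts the "remote" stand-in knows how to ask about.
KNOWN_FACTS = ("blood pressure", "LDL", "HDL")

SAMPLE_CONTEXT = "Blood pressure: 160/100 mmHg\nLDL: 170 mg/dL\nHDL: 45 mg/dL"
SAMPLE_TASK = "Evaluate blood pressure and LDL together."


def local_step(question: str, context: str) -> str:
    """Stand-in for the local model: answer with the context lines that mention the question."""
    hits = [ln.strip() for ln in context.splitlines() if question.lower() in ln.lower()]
    return "; ".join(hits) if hits else "not found"


def remote_step(task: str, transcript: list[tuple[str, str]],
                force_final: bool = False) -> tuple[str, str]:
    """Stand-in for the cloud model: ask for the next missing fact, or give a final answer."""
    needed = [fact for fact in KNOWN_FACTS if fact.lower() in task.lower()]
    asked = {question for question, _ in transcript}
    pending = [fact for fact in needed if fact not in asked]
    if pending and not force_final:
        return "ask", pending[0]
    answer = " | ".join(reply for _, reply in transcript)
    return "final", answer or "insufficient information"


def minion_loop(task: str, context: str, max_rounds: int = 2) -> dict:
    transcript: list[tuple[str, str]] = []
    for _ in range(max_rounds):
        action, payload = remote_step(task, transcript)
        if action == "final":
            return {"final_answer": payload, "rounds_used": len(transcript)}
        # One round = one remote question plus one local reply.
        transcript.append((payload, local_step(payload, context)))
    # Round budget exhausted: force the remote side to answer with what it has.
    _, payload = remote_step(task, transcript, force_final=True)
    return {"final_answer": payload, "rounds_used": len(transcript)}


if __name__ == "__main__":
    print(minion_loop(SAMPLE_TASK, SAMPLE_CONTEXT, max_rounds=2)["final_answer"])
```

With `max_rounds=2`, this prints `Blood pressure: 160/100 mmHg | LDL: 170 mg/dL`. With `max_rounds=1`, the forced final answer contains only the blood pressure reading.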

MinionS

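With a few modifications, the same code runs the MinionS protocol. Import Minions instead of Minion, give the local client a temperature of 0.0 and a structured output schema, and pass doc_metadata when calling it. Replace the contents of example.py with: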

```python
from minions.clients.ollama import OllamaClient
from minions.clients.openai import OpenAIClient
from minions.minions import Minions
from pydantic import BaseModel


# Schema the local model's responses must follow
# (the `str | None` syntax requires Python 3.10 or newer)
class StructuredLocalOutput(BaseModel):
    explanation: str
    citation: str | None
    answer: str | None


local_client = OllamaClient(
    model_name="llama3.2",
    temperature=0.0,
    structured_output_schema=StructuredLocalOutput,
)

remote_client = OpenAIClient(
    model_name="gpt-4o",
)

# Instantiate the Minions object with both clients
minions = Minions(local_client, remote_client)

context = """
Patient John Doe is a 60-year-old male with a history of hypertension. In his latest checkup, his blood pressure was recorded at 160/100 mmHg, and he reported occasional chest discomfort during physical activity.

Recent laboratory results show that his LDL cholesterol level is elevated at 170 mg/dL, while his HDL remains within the normal range at 45 mg/dL. Other metabolic indicators, including fasting glucose and renal function, are unremarkable.
"""

task = "Based on the patient's blood pressure and LDL cholesterol readings in the context, evaluate whether these factors together suggest an increased risk for cardiovascular complications."

# Execute the MinionS protocol for up to two communication rounds
output = minions(
    task=task,
    doc_metadata="Medical Report",
    context=[context],
    max_rounds=2,
)

print(output["final_answer"])
```

After making the modifications, re-run the example:

```bash
python example.py
```
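The MinionS example constrains the local model's replies to `StructuredLocalOutput`, where `citation` and `answer` may be null. One reading, which is an assumption rather than something the example states, is that a worker whose chunk holds nothing relevant returns null fields.

The sketch below parses JSON replies shaped like that schema and keeps only the ones that produced an answer. It uses a standard-library dataclass as a stand-in for the pydantic model. It illustrates the idea and is not the package's aggregation code.

```python
from __future__ import annotations

import json
from dataclasses import dataclass


@dataclass
class LocalOutput:
    """Stdlib stand-in mirroring the fields of StructuredLocalOutput."""
    explanation: str
    citation: str | None = None
    answer: str | None = None


def parse_reply(raw: str) -> LocalOutput:
    """Parse one JSON reply from a local worker; missing optional keys become None."""
    data = json.loads(raw)
    return LocalOutput(
        explanation=str(data["explanation"]),
        citation=data.get("citation"),
        answer=data.get("answer"),
    )


def useful_outputs(raw_replies: list[str]) -> list[LocalOutput]:
    """Keep only replies that actually produced an answer for their chunk."""
    parsed = [parse_reply(raw) for raw in raw_replies]
    return [p for p in parsed if p.answer is not None]


if __name__ == "__main__":
    replies = [
        '{"explanation": "This chunk reports blood pressure.", "citation": "160/100 mmHg", "answer": "Elevated blood pressure"}',
        '{"explanation": "No lipid data in this chunk.", "citation": null, "answer": null}',
    ]
    for output in useful_outputs(replies):
        print(output.answer, "|", output.citation)
```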